Case Study: Sphere and the Case of Contextually Related Content

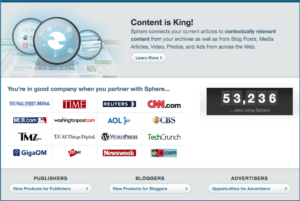

We began working with related-content provider Sphere over a decade ago. Sphere was the nexus between thousands of “long-tail” bloggers (e.g., private individuals) and high-volume news sites like CNN, Time and the Wall Street Journal. For me personally, it was easily one of the most exciting opportunities I’ve had. Working there, you felt like you had your finger on the pulse of current events, because a large part of our job was ensuring that our related content matched each story appropriately.

Ultimately, this was a bit trickier than you might expect. Roughly 90% or more of our results were beautifully accurate. We were using Lucene before it was cool, and it was phenomenal at helping us determine relevance. Some types of documents, however, made contextual relevance difficult to determine in real time. The most notorious culprits were travel articles, articles heavily laden with slang, and articles slim on content.

This had been a thorn in the company’s side since its inception, and all the tuning in the world on the generated Lucene queries had no effect.

The solution to this problem was to create MemeFinder.

Thinking outside the virtual sandbox…

So I was tasked with solving this problem, and I had to work out how to go about it. The primary problem was that the entity extraction was keying on words that were homonyms. Beyond that, it was making what we see as a very common mistake in the search industry: it was focusing on commonality. On the surface, it makes sense to focus on what two bodies of text have in common. Term frequency weighting (TF-IDF) is an industry-standard algorithm; although somewhat arcane, it remains effective in many cases, as does its younger sibling, BM25, which ranks documents using the probabilistic relevance framework. Both algorithms work well in many cases, but there are edge cases where they simply fall short.
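To make the commonality point concrete, here is a minimal sketch of Okapi BM25 scoring in Python. It uses the textbook formula with a Lucene-style IDF and the common defaults k1 = 1.2 and b = 0.75; the function name `bm25_scores` and the toy corpus are illustrative assumptions, not anything Sphere ran. A document's score is a sum over the query terms it shares, so a term the document lacks contributes nothing.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score each tokenized doc against a tokenized query with Okapi BM25.

    IDF is ln(1 + (N - n + 0.5) / (n + 0.5)), the Lucene-style variant,
    which stays positive even for very common terms.
    """
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: how many docs contain each term at least once.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue  # only shared terms contribute: ranking rewards commonality
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: long docs need more occurrences for the same credit.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


if __name__ == "__main__":
    docs = [
        ["paris", "hotel", "cheap", "flights"],
        ["python", "lucene", "search"],
        ["cheap", "flights", "to", "rome"],
    ]
    print(bm25_scores(["cheap", "flights", "paris"], docs))
```

With this toy corpus, the document sharing all three query terms ranks first and the one sharing none scores exactly zero: relevance is measured purely by overlap.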

So when I began creating MemeFinder, I approached it from the perspective of a linguist, rather than a computer scientist.

Did I mention that I was an English/Sociology major long, long ago? It’s true, and until I wrote MemeFinder it was my dirty little secret, something you just didn’t talk about in technology circles. I’ve actually been writing code since I was a kid (back in the ’70s, when most kids didn’t write code), but I ultimately decided to pursue a career studying my native language.

As it turns out, it was one of the best decisions I ever made.

It was that ability to think like an English major that allowed me to break the language down into its components: pronouns, modal auxiliaries, articles, prepositions and so on. Once I had gathered those, I mostly discarded them, at least initially. MemeFinder also discards much of the common ontology. There are over 100,000 words in the English language, but only about 10,000 are used on a regular basis. That’s really not a large data set, when you think about it.

The problem, though, was that it was English. English is the Borg of languages, absorbing whatever it finds useful and creating words where none existed before. On top of that, people are generally horrible at spelling and writing. They do, however, signal the important aspects of a document, so the keys were ultimately found in punctuation, grammar and semantics. MemeFinder focused on the differences rather than the commonality, and generated a ‘memeotype’ from this distinctiveness, which could then be turned into a distinctive Lucene query.
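The memeotype itself isn’t specified beyond this description, so what follows is only a hypothetical Python sketch of the general idea: drop function words, score the remaining words by how over-represented they are relative to a background frequency table, and render the top terms as a boosted Lucene query. The function-word list, the background frequencies, and the names `distinctive_terms` and `to_lucene_query` are all assumptions for illustration; the `term^boost` notation is Lucene’s classic query-parser boost syntax. Unlike MemeFinder as described, this sketch ignores punctuation and grammar cues entirely.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny function-word list; a real one would be far larger.
FUNCTION_WORDS = frozenset({
    "i", "you", "he", "she", "it", "we", "they",                # pronouns
    "can", "could", "may", "might", "must", "shall",            # modal auxiliaries
    "should", "will", "would",
    "a", "an", "the",                                            # articles
    "at", "by", "for", "from", "in", "of", "on", "to", "with",  # prepositions
    "and", "or", "is", "was",                                    # conjunctions, copulas
})


def distinctive_terms(text, background, top_n=5):
    """Rank content words by log(doc relative frequency / background frequency).

    `background` maps term -> relative frequency in general text (assumed data).
    Terms absent from it get a tiny floor, so unknown words rank as very distinctive.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(t for t in tokens if t not in FUNCTION_WORDS)
    total = sum(counts.values())
    if total == 0:
        return []
    floor = 1e-6
    scored = {
        term: math.log((n / total) / background.get(term, floor))
        for term, n in counts.items()
    }
    # Most over-represented first; ties broken alphabetically for stable output.
    return sorted(scored.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


def to_lucene_query(terms, min_boost=0.1):
    """Render (term, score) pairs as Lucene classic-syntax boosted clauses: term^boost."""
    return " ".join(f"{term}^{max(score, min_boost):.2f}" for term, score in terms)


if __name__ == "__main__":
    background = {"cheap": 2e-4, "great": 5e-4, "hotel": 5e-5,
                  "lovely": 1e-3, "paris": 2e-5, "stayed": 1e-3}
    text = "We stayed at a cheap hotel in Paris. The hotel was great, and Paris was lovely."
    terms = distinctive_terms(text, background, top_n=3)
    print(to_lucene_query(terms))
```

Here a word like “great”, which the background table marks as common everywhere, falls out of the top terms, while “paris”, rare in general text but repeated in the document, rises to the top. Clamping scores to a small positive floor keeps every boost positive.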

Parsing the document and generating the query were only part of the solution, though. The other parts were speed and resource consumption.

That is: it had to be fast and lean.

Sphere processed several hundred million requests per day, so there was no time to spare in parsing documents. MemeFinder’s architecture is both thread-safe and largely static. Once instantiated, it runs extremely fast, processing an average 600–1,000-word article in under 100 milliseconds.
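MemeFinder’s internals aren’t described here, but one common way to get a component that is both thread-safe and largely static is to build all lookup data once and never mutate it afterward. The hypothetical Python sketch below (the class `Extractor`, its toy weights, and `process_all` are illustrative assumptions) freezes its state with `frozenset` and `MappingProxyType`, so many threads can share one instance without locks. Python’s GIL limits CPU-bound speedups from threads, so this shows the design pattern, not MemeFinder’s performance.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class Extractor:
    """Hypothetical extractor whose lookup data is built once and never mutated."""
    stop_words: frozenset
    weights: MappingProxyType  # read-only view of term -> weight

    @classmethod
    def build(cls, stop_words, weights):
        # All setup cost is paid here, once; copies guard against outside mutation.
        return cls(frozenset(stop_words), MappingProxyType(dict(weights)))

    def process(self, text):
        # Reads only immutable state and local variables, so concurrent calls are safe.
        tokens = re.findall(r"[a-z']+", text.lower())
        return sum(self.weights.get(t, 0.0) for t in tokens if t not in self.stop_words)


# One shared, module-level instance: instantiate once, reuse for every request.
EXTRACTOR = Extractor.build({"a", "of", "the"}, {"lucene": 2.0, "sphere": 1.5})


def process_all(texts, workers=8):
    """Process many texts concurrently against the single shared extractor."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(EXTRACTOR.process, texts))


if __name__ == "__main__":
    print(process_all(["Sphere uses Lucene", "the end", "lucene lucene"]))
```

Because `process` touches no shared mutable state, adding threads requires no locking, and the cost of building the lookup tables is paid once at startup.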

Yet speed and linguistic ninja tricks aside, perhaps its most distinctive aspects were its entity extraction and the manner in which it compared corpora.

The Paradox of Related Content and Diminishing Returns

One thing you might notice about the typical search engine is that there is a “sweet spot” for the number of query terms: too few return irrelevant documents, and too many generally return nothing closely related. MemeFinder differs in this respect because it was designed to compare two bodies of text, rather than a short fragment against a body of text. Thus, the more query terms MemeFinder has to work with, the more precise its results.

MemeFinder was put into production at Sphere and solved the contextual relevance issue.